Projects

Working on two aspects of DBpedia knowledge graphs: (a) a framework to create an image-based knowledge graph from existing DBpedia entries; (b) using the resulting graph for tasks such as image querying, combined text and image search, and using relevant input images to add more images to existing articles.

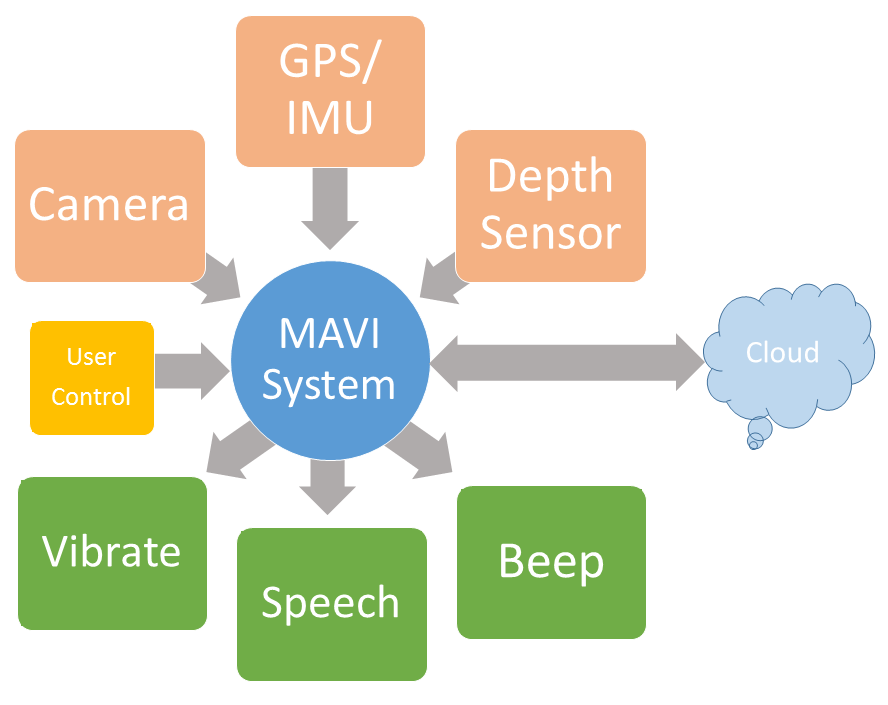

Working on detecting and recognising text in images from the Mobility Assistant for Visually Impaired (MAVI), developed at IIT Delhi.

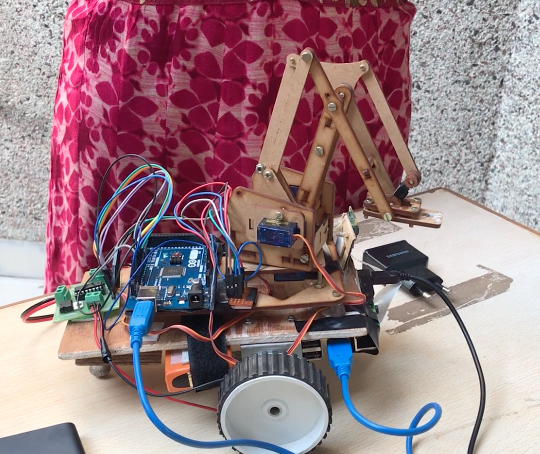

Led a team of three to build a robot on a Raspberry Pi that could detect waste bottles and pick them up.

Developed an algorithm for getting a rough estimate of the distance of the garbage from the robot (with an error margin of 2 cm).
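
One common way to get such an estimate from a single camera is the pinhole-camera relation, sketched below. This is only an illustration and not necessarily the method used in the project: the function name, the calibrated focal length in pixels, and the known bottle height are all assumptions.

```python
def estimate_distance_cm(focal_px: float, real_height_cm: float, pixel_height: float) -> float:
    """Estimate object distance with the pinhole-camera model (assumed approach).

    distance = focal_length_in_pixels * real_object_height / object_height_in_pixels
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_px * real_height_cm / pixel_height


# Hypothetical numbers: a 20 cm bottle that appears 120 px tall,
# seen through a camera with a focal length of 600 px.
distance = estimate_distance_cm(focal_px=600, real_height_cm=20, pixel_height=120)
```

The focal length in pixels would come from a one-off calibration, for example by measuring the pixel height of the bottle at a known distance.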

Developed a path-planning algorithm based on PID control, treating the bottle as the centre (the target the robot steers towards).
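
A minimal sketch of the idea, assuming the error is the bottle's horizontal offset from the centre of the camera frame. The class, the gains, and the `steering_command` helper are hypothetical and only illustrate a textbook PID loop, not the project's actual code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float  # proportional gain
    ki: float  # integral gain
    kd: float  # derivative gain
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        """Return the control output for the current error and time step."""
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def steering_command(bottle_x: float, frame_width: int, pid: PID, dt: float) -> float:
    """Map the bottle's horizontal pixel position to a steering correction.

    The error is normalised to [-1, 1]; 0 means the bottle is centred.
    """
    half_width = frame_width / 2
    error = (bottle_x - half_width) / half_width
    return pid.step(error, dt)


# Hypothetical gains; real values would be tuned on the robot.
pid = PID(kp=0.8, ki=0.05, kd=0.1)
turn = steering_command(bottle_x=480, frame_width=640, pid=pid, dt=0.1)
```

A positive output here would mean "turn right" and a negative one "turn left"; the sign convention and the mapping to motor speeds are left open.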

Among the top 4% of projects selected for demonstration at the SSIP annual conference.

Created an end-to-end system for detecting smiling faces in a live video stream using a convolutional neural network.

Learned about Deep Q-Learning by implementing it to drive a car autonomously.
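
At the core of Deep Q-Learning is the temporal-difference target that the Q-network is trained to match. The sketch below computes that target for a small batch in plain Python; the function name and the batch layout are assumptions for illustration, and the network, replay buffer, and driving environment are omitted.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute Q-learning targets: r + gamma * max_a' Q(s', a').

    rewards:       list of floats, one per transition
    next_q_values: list of lists, Q-values for every action in the next state
    dones:         list of bools, True if the episode ended on that transition
    """
    targets = []
    for reward, q_next, done in zip(rewards, next_q_values, dones):
        # Terminal transitions have no future value to bootstrap from.
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(reward + bootstrap)
    return targets


# Two hypothetical transitions: one ongoing, one terminal.
targets = td_targets(
    rewards=[1.0, 0.0],
    next_q_values=[[0.5, 2.0], [3.0, 1.0]],
    dones=[False, True],
    gamma=0.5,
)
```

In a full implementation, the network's prediction for the action actually taken would be regressed towards these targets.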

In this project, I trained autoencoders to learn features from 140,000 images. I then extended the trained autoencoder with additional convolutional layers to classify anime images, answering questions such as the following with 74.6% accuracy:

Does the image contain any nudity or sexual content? (Yes, No)

Is this an interesting image or not? (Yes, No)